I’m sure you have seen this interesting rift between programmers. It goes beyond the friendly competition about who favors which IDE or which programming language has the nicer syntax, and it extends to the very core of how we navigate the systems in front of us.

In essence, there are two kinds of people when it comes to computer navigation: those who rely on the mouse and can’t understand why anyone would rather type text, and the few of us who have seen the light and prefer using the keyboard as much as possible. Here are some good reasons.

The keyboard is MUCH faster (once you get used to it)

If I need to convince you, let me give you the easiest of examples that probably everyone understands: Control-C/V instead of reaching for the mouse, right-clicking, finding the right menu item among a million choices, then doing it all again for the pasting. Nearly everyone I know has long since learned to use the keyboard for copy/pasting, even the non-IT staff.

But this is just the tip of the iceberg, and most people still need the mouse to select the text in the first place (instead of using Control-Shift-Arrow or Shift-Home/End). Most people also backspace individual typos instead of pressing Control-Backspace and retyping the whole word. Many also use the mouse to switch between active windows instead of Alt-Tabbing.

It is hard to explain to people how something as simple as using the mouse wastes a lot of time without them even noticing. Each reach for the mouse seems like no time at all, but it adds up, and I’m convinced that most people lose an hour or even more every single day this way. Wouldn’t you rather do something else with that time, either slack off or get more work done?

Navigating text with your keyboard

For how simple it is, a surprising number of people do not make use of the most basic thing their computer offers by default: navigating text efficiently.

Almost every text editor allows you to do these simple things that still save you hours of time over the course of your life:

Control-Backspace / Control-Delete will eradicate the word to the left/right of the cursor without the need to individually backspace each letter.

Shift plus your arrow keys lets you select text with the keyboard. Add Control in there and you can select whole words. Use Shift and Home/End to select from the cursor to the start or end of the line, and use them without Shift to jump to the start or end of a line. This way you can press Home, then Shift-End, then Backspace and a whole line is gone in a second.

Control-UpArrow will jump up a paragraph in many text editors. I don’t use it much, but it’s a little faster than using just the UpArrow.

Control-F allows you to find words. It also lets you quickly jump down to a specific part of a website or long document when you know what you are searching for. Control-H opens a similar dialog that also lets you replace words / phrases in many programs.

Control-Home/End allow you to jump to the beginning/end of the document, which is very useful if you went back to correct a sentence or something and then want to continue writing at the end of the document.

Navigating your browser (for beginners)

Almost everyone I know relies on the mouse to use their browser. It seems like hardly anyone even knows you can use Control-L to jump to the search / URL bar and type a website URL.

Control-T will open a new tab.

Control-W will close the current tab.

Control-1 through 8 will jump straight to the tab at that position, and Control-9 will always jump to the last open tab on the very right.

Control-PageUp/Down will cycle through your open tabs, while plain PageUp/Down will scroll the page.

Speaking of scrolling the page: You can scroll down by just pressing the space bar which is easily the most convenient way to scroll down while your other hand is busy…holding the water bottle that you use to hydrate well enough and be more responsible than your peers.

Control-R reloads the page and is a little easier to reach than F5 which does the same thing.

Your URL bar is also your search bar. If you type www.google.com into it, then reach for the mouse, click the already focused search box, and only then type the word you were actually looking for: please just stop doing that.

Navigating your browser (advanced)

This requires you to get a little (Chrome) plugin called Vimium, and it’s the best thing since sliced bread.

It allows you to navigate, scroll, find and click links on the site, all without using your mouse. If you are aware of how multi-key shortcuts work in IDEs like VS/VS Code/JetBrains then it will be second nature to you. If not, it’s quite easy to get the hang of it:

A shortcut like “Ctrl-KD” means you will press control, keep it down, then press K, then D and your code will be beautifully formatted if you happen to be in Visual Studio. This is confusing at first, but it’s the greatest thing that ever happened in my life because it means that almost all functions in most programs I use now come with shortcuts and I can remember those I need most frequently. Then, if I really don’t remember something I can always go back to using the mouse, aimlessly searching through windows, menu bars, option choices until I figure out where that stupid little function is hiding.

With Vimium you simply press “F” and every link on the page gets a small label with a letter or two on it.

Then you can simply take a look at the link you want to open and type its label, say “P”, to find out why your wife has grown all cold with you and why she is much nicer to the neighbor who knows how to fix actual real life things and probably can’t use a keyboard as well as you can.

There are other great shortcuts, but this combined with the beginner section is what I use the most. The other one is j/k, which will smoothly scroll down and up the page. That’s quite nice whenever the space bar is too fast and too furious.

Your Windows Explorer is just like a web browser

One thing that many people don’t realize is that your Windows Explorer can be navigated just fine with the keyboard as well.

WindowsKey-E opens the thing.

Using Tab, Shift and the arrow keys you can move around and select files, Delete removes them, and F2 lets you rename them.

With those out of the way it’s time to become a console wizard

I don’t know if you ever had the luxury of watching a console wizard in action, those people who will randomly say things like “yeah, just cd into that folder and run the build.ps1 and the errors should resolve”.

They do not quite grasp why that sounds confusing to many people, even hardened developers. I mean, if you don’t cd into that folder, then how do you even work?

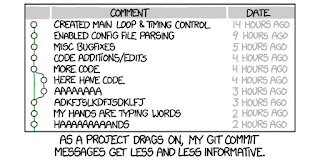

A pipe isn’t something you light after work, you use it during work to chain up commands. Sure, Git has some GUI clients, but really, why would you? Just open up PowerShell or cmd, type git commit and don’t forget to add a descriptive commit message like “fixed the bug”.
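If you have never seen a pipe in the wild, here is a minimal sketch of the chaining idea. It assumes a POSIX-style shell (Git Bash or WSL on Windows, for example), and the build log and the names build_log and top_errors are made up purely for illustration.

```shell
# Hypothetical build output; the messages are invented for this example.
build_log() {
  printf '%s\n' \
    'error: missing semicolon' \
    'warning: unused variable' \
    'error: missing semicolon' \
    'error: undefined symbol'
}

# Each | hands the output of the command on its left to the command on its right:
# keep only the error lines, sort them so duplicates sit next to each other,
# collapse the duplicates into one line with a count, then put the biggest count on top.
top_errors() {
  build_log | grep '^error' | sort | uniq -c | sort -rn
}

top_errors
```

Four small commands, none of which knows about the others, and together they tell you which error to fix first. PowerShell uses the same | character, just with its own commands on either side.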

If you are in VS Code you get an integrated terminal, which sounds pretty useless until you start using it. I’m on a German keyboard layout so it takes just a press of Control-Ö to open it, easy to remember as the German word for terminal is Ökonsole. It’s very environmentally friendly. Control-Shift-P will open the command “P”alette where you can quickly access all common features of VS Code.

Tab will serve as an auto-complete for console commands. Pressing Tab multiple times cycles through the options, and Shift-Tab goes back an option if you were thinking too quickly again and need to backtrack a little.

Using the UpArrow gives you the last used command so you can fix your stupid typo, and if you accidentally committed your changes to the master branch you can just use git -unfuck -everything and it will all work without any rebasing or merging.

Avoid preaching, anyone who’s ready to convert will come and ask

The simple truth about life in general, and keyboard usage in particular, is this: Your words won’t change anyone’s opinion. If you read this far it’s not because of my words, but because you previously watched someone do keyboard wizardry and realized how quick it can be.

You were already curious and all I did was to show you the way, lead you astray and now it’s happened and you don’t look at the other gender with the same eyes anymore. They don’t react to your touches the way your keyboard does, they don’t understand you the way your computer does.

Congratulations, you have completed the journey, and even if your beard isn’t grey yet or you can’t even grow one you have achieved sufficient wisdom to be called a Greybeard. Go live in a mountain somewhere and wait until those who seek wisdom come to you.